Breaking free of the legacy straitjacket - modernizing insurance systems

SageSure’s growth has been phenomenal, and keeping our applications in step with business expansion has been a challenge. Early on, SageSure invested in building applications based on relational database (RDBMS) technology. Core policy functions like quoting, binding, policy administration, endorsements, and billing relied on a tapestry of stored procedures, triggers, and application-level code. A few years back, our engineering team identified Temporal Workflows as a powerful way to modernize our intricate, stateful business processes.

Challenges of RDBMS-driven workflows

The growth in our business has introduced specific complexities that exacerbate the pain points of traditional RDBMS-managed workflows:

- Rapid Product Development: In dynamic insurance markets, rules and regulations change frequently, and new product offerings are crucial for our competitive advantage. The tight coupling of business logic to stored procedures significantly slows down the speed at which we can introduce and iterate on new products or pricing models.

- Catastrophic Event Scalability: Following a hurricane or other major weather event, claims volume can spike exponentially. Our DB-driven, monolithic systems become bottlenecks, delaying the processing of critical claims and hurting policyholder satisfaction during their greatest time of need.

- Audit and Compliance: Tracing the exact sequence of events for regulatory compliance or internal audits in a DB-driven workflow often means piecing together scattered data points.

- Retry Logic for External Integrations: We rely heavily on external services such as payment processing, geolocation, and more. Implementing robust retry mechanisms with back-off for these critical external calls within a DB-driven system is incredibly challenging and a common source of subtle, hard-to-diagnose failures.

- Limited Real-time Operational Visibility: Gaining real-time insight into the progress of a complex commission payment system or a high-volume policy registration is challenging. Identifying bottlenecks or policy application fallouts often relies on batch reporting or deep database queries, which hinders proactive intervention.

Enter Temporal: durable execution

Temporal is an open-source, distributed system that enables us to write durable, fault-tolerant, and scalable workflows as code. Instead of relying on a database for state management and orchestration, Temporal provides a dedicated platform that ensures our workflow executions are:

- Durable: Workflow state is automatically persisted, so even if our services crash, the workflow execution resumes exactly where it left off. No data is lost, and no manual recovery of in-flight policies is needed.

- Fault-Tolerant: Temporal manages retries, timeouts, and error handling for external calls (e.g., to third-party data providers, payment processors, or external rating engines). We define the business logic, and Temporal ensures it executes reliably, even in the face of transient network issues or external system outages.

- Scalable: Temporal is designed for high-throughput, long-running workflows, allowing us to scale our underwriting, policy processing, and high-volume claims handling independently of our application’s compute resources, which is crucial during catastrophe (CAT) events.

- Observable: Every workflow execution has a complete, queryable event history, making it easy to debug, audit for compliance, and understand the real-time status of any policy application, claim, or billing event from initiation to completion. No more playing detective with fragmented logs; the full story is always there.
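To make the fault-tolerance point concrete, here is a minimal plain-Java sketch of the retry-with-back-off loop that would otherwise have to be hand-written around every external call. It is not Temporal SDK code, and the names `RetrySketch`, `backoffDelay`, and `callWithRetry` are hypothetical. With Temporal, the same parameters (initial interval, back-off coefficient, maximum interval, maximum attempts) are declared as a retry policy on the Activity options rather than coded as a loop.

```java
import java.time.Duration;
import java.util.concurrent.Callable;

// Plain-Java illustration only; this is NOT Temporal SDK code.
// RetrySketch, backoffDelay, and callWithRetry are hypothetical names.
class RetrySketch {

    /** Delay before retry number `retry` (1-based): initial * coefficient^(retry-1), capped at max. */
    static Duration backoffDelay(int retry, Duration initial, double coefficient, Duration max) {
        double millis = initial.toMillis() * Math.pow(coefficient, retry - 1);
        return Duration.ofMillis((long) Math.min(millis, max.toMillis()));
    }

    /** Runs the call up to maxAttempts times, sleeping with exponential back-off between failures. */
    static <T> T callWithRetry(Callable<T> call, int maxAttempts,
                               Duration initial, double coefficient, Duration max) throws Exception {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        Exception last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return call.call();
            } catch (Exception e) {
                last = e;
                if (attempt < maxAttempts) {
                    Thread.sleep(backoffDelay(attempt, initial, coefficient, max).toMillis());
                }
            }
        }
        throw last; // every attempt failed; surface the most recent error
    }

    public static void main(String[] args) throws Exception {
        int[] calls = {0};
        // Simulated payment gateway that fails twice with a transient error, then succeeds.
        String result = callWithRetry(() -> {
            if (++calls[0] < 3) {
                throw new IllegalStateException("transient gateway error");
            }
            return "PAID";
        }, 5, Duration.ofMillis(10), 2.0, Duration.ofMillis(100));
        System.out.println(result + " after " + calls[0] + " attempts"); // PAID after 3 attempts
    }
}
```

Note that this loop keeps its attempt counter in memory, so a process crash loses it. That gap is the part a durable execution platform closes.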

Legacy services migration

Migrating the intricate, stateful processes within our legacy RDBMS-based applications to Temporal involves identifying and extracting the core business processes currently orchestrated within the database and application code. Here’s our conceptual approach:

- Identify Core Workflows: We analyzed our existing systems to pinpoint sequences of operations that represent a complete business process (e.g., “New Policy Application, Underwriting & Issuance,” “Claims Management,” “Premium Billing & Collection with Payment Plan Management,” “Policy Endorsement & Mid-term Adjustments”). These are our prime candidates for Temporal Workflows.

- Decouple and Extract Logic:

  - Stored Procedures/Triggers: We converted the complex business rules and data manipulation logic embedded in the stored procedures and triggers into independent Temporal Activities. Activities are typically short-lived, idempotent operations that interact with external systems, like our existing database, other microservices, or third-party APIs.

  - Application-Level State & Orchestration: We replaced the application code that manages policy, payment, or endorsement state in database tables, often with complex state machines and polling loops, with Temporal’s durable workflow execution.

- Define Temporal Workflows: We wrote our core insurance processes as Temporal Workflows using Temporal’s Java SDK. Our Workflow code orchestrates the execution of Activities, which represent the steps of our insurance process.

- Implement Temporal Activities: We created the Activity implementations that encapsulate the specific actions. These Activities make the actual calls to our database (e.g., updating policy statuses), interact with legacy systems via APIs (e.g., integrating with existing policy admin systems or billing platforms), or connect to new microservices (e.g., high-definition property pictures).

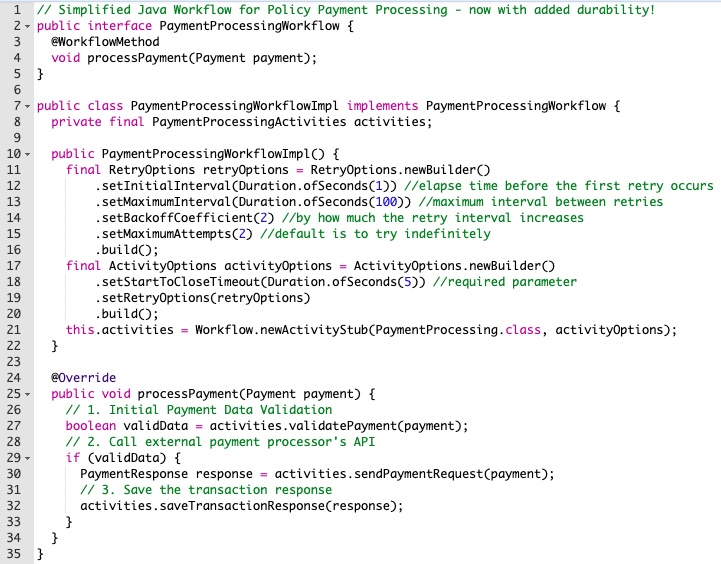

Example: policy payment processing workflow using Java
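As a rough illustration of the Workflow/Activity split described above, the following plain-Java sketch models a payment workflow that charges a payment and then sends a receipt. It is not our production code and deliberately uses no Temporal SDK types so that it runs standalone; every name in it (`PaymentActivities`, `InMemoryPaymentActivities`, `PolicyPaymentWorkflow`, the `CONF-` prefix) is hypothetical. In the Temporal Java SDK, the activities interface would be annotated with `@ActivityInterface`, the workflow would be declared through a `@WorkflowInterface`, and the workflow would call activities through a stub obtained from `Workflow.newActivityStub`.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java sketch of the Workflow/Activity split; NOT Temporal SDK code.
// All type and method names below are hypothetical.

/** Steps that touch external systems (payment processor, email service). */
interface PaymentActivities {
    String chargePayment(String paymentId, long amountCents);
    void sendReceipt(String policyId, String confirmationId);
}

/** In-memory stand-in for the external services, used only to make the sketch runnable. */
class InMemoryPaymentActivities implements PaymentActivities {
    private final Map<String, String> confirmations = new HashMap<>();
    final List<String> emails = new ArrayList<>();

    @Override
    public String chargePayment(String paymentId, long amountCents) {
        // Idempotent: a retried call with the same paymentId returns the
        // original confirmation instead of charging a second time.
        return confirmations.computeIfAbsent(paymentId, id -> "CONF-" + id);
    }

    @Override
    public void sendReceipt(String policyId, String confirmationId) {
        emails.add(policyId + ":" + confirmationId);
    }

    int chargeCount() {
        return confirmations.size();
    }
}

/** Orchestration only: decides the order of steps and never calls external systems directly. */
class PolicyPaymentWorkflow {
    private final PaymentActivities activities;

    PolicyPaymentWorkflow(PaymentActivities activities) {
        this.activities = activities;
    }

    String processPayment(String policyId, String paymentId, long amountCents) {
        if (amountCents <= 0) {
            throw new IllegalArgumentException("amount must be positive");
        }
        String confirmationId = activities.chargePayment(paymentId, amountCents);
        activities.sendReceipt(policyId, confirmationId);
        return confirmationId;
    }
}

class PaymentWorkflowDemo {
    public static void main(String[] args) {
        InMemoryPaymentActivities activities = new InMemoryPaymentActivities();
        PolicyPaymentWorkflow workflow = new PolicyPaymentWorkflow(activities);
        System.out.println(workflow.processPayment("POL-1", "PAY-42", 12_500)); // CONF-PAY-42
    }
}
```

Keying the charge on a payment identifier is one way to keep the Activity idempotent, which matters because a retried Activity may run more than once.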

Temporal Batch Iterator pattern for large-scale data processing

One of the most challenging aspects of migrating our legacy insurance systems is dealing with vast amounts of existing data that need to be processed, for example, for monthly statement generation. Traditionally, this involved complex, fragile batch jobs, often orchestrated via a database agent or custom scripts, which are prone to failure and difficult to monitor.

Temporal’s Batch Iterator Pattern provides a powerful, durable, and observable way to handle these large-scale data processing tasks. Instead of trying to process a large dataset within a single, long-running workflow, the pattern works as follows:

- Orchestrator Workflow: A main “Orchestrator” Workflow is responsible for:

  - Querying our database via an Activity to identify a batch of records to process.

  - Spawning a separate Child Workflow for each individual record or small group of records within that batch (e.g., one child workflow per policy payment record).

  - Maintaining its own state to track which batches have been processed and to aggregate results from the child workflows.

- Child Workflows: Each Child Workflow handles the processing logic for its specific record. These child workflows benefit from all of Temporal’s durability, fault tolerance, and retry capabilities.

- Durable Iteration: If the Orchestrator Workflow or the Activity querying the database fails, Temporal ensures it resumes from the last known state. It won’t re-process records that have already had child workflows spawned for them, and it can pick up the next batch seamlessly. This significantly increases the reliability of large-scale batch operations that were once a source of significant operational overhead.
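The division of labor above can be sketched in plain Java. This is a simulation of the pattern's control flow under stated assumptions, not Temporal SDK code: `BatchIteratorSketch`, `loadBatch`, `runOrchestrator`, and `Summary` are hypothetical names, record ids are assumed to be positive and sorted, and the "child workflow" is just a callback that runs inline, whereas real child workflows are separate durable executions that can run in parallel.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.IntPredicate;

// Plain-Java simulation of the Batch Iterator pattern; NOT Temporal SDK code.
// BatchIteratorSketch, loadBatch, runOrchestrator, and Summary are hypothetical names.
class BatchIteratorSketch {

    /** Stand-in for the loading Activity: up to pageSize ids greater than afterId. */
    static List<Integer> loadBatch(List<Integer> sortedIds, int afterId, int pageSize) {
        List<Integer> batch = new ArrayList<>();
        for (int id : sortedIds) {
            if (batch.size() >= pageSize) {
                break;
            }
            if (id > afterId) {
                batch.add(id);
            }
        }
        return batch;
    }

    /** State the orchestrator keeps across batches. */
    static final class Summary {
        int batches;
        int succeeded;
        int failed;
        int lastProcessedId; // 0 means "nothing processed yet" (ids assumed positive)
    }

    /** Orchestrator loop: fetch a batch, hand each record to a child, aggregate the results. */
    static Summary runOrchestrator(List<Integer> sortedIds, int pageSize, IntPredicate childWorkflow) {
        Summary summary = new Summary();
        while (true) {
            List<Integer> batch = loadBatch(sortedIds, summary.lastProcessedId, pageSize);
            if (batch.isEmpty()) {
                return summary; // no more records
            }
            summary.batches++;
            for (int id : batch) {
                if (childWorkflow.test(id)) {
                    summary.succeeded++;
                } else {
                    summary.failed++;
                }
                summary.lastProcessedId = id;
            }
        }
    }

    public static void main(String[] args) {
        List<Integer> paymentIds = List.of(1, 2, 3, 4, 5, 6, 7);
        // Hypothetical child outcome: record 5 fails, everything else succeeds.
        Summary s = runOrchestrator(paymentIds, 3, id -> id != 5);
        System.out.println("batches=" + s.batches + " succeeded=" + s.succeeded + " failed=" + s.failed);
        // batches=3 succeeded=6 failed=1
    }
}
```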

The Batch Iterator pattern is ideal for tasks like:

- Mass Policy Renewals: Spawning a workflow for each policy due for renewal, managing complex premium calculations and endorsements.

- Statement/Billing Cycle Generation: Creating a workflow per customer to generate their annual statement or manage complex installment billing.

- Data Migration & Transformation: Processing millions of legacy records to migrate them to a new system or format, ensuring each record is processed durably.

- Regulatory & Compliance Reporting: Generating individual reports or performing checks on a large number of entities.

Sequence diagrams illustrating the Batch Iterator Pattern:

Explanation of sequence diagram 1

- A user or an application kicks off our main “Orchestrator Workflow”.

- The Orchestrator Workflow runs in a loop. In each iteration, it calls a loadData Activity, which is executed by an Activity Worker.

- The loadData Activity interacts with the database to fetch a defined “batch” of records, often using pagination.

- Once the Orchestrator receives a batch, it starts a Child Workflow for each record in that batch.

- Each Child Workflow is a separate, independent, and durable execution that handles the specific processing for that single record.

- The Orchestrator maintains its internal state (like lastProcessedId) to ensure that if it fails and restarts, it knows where to resume fetching the next batch without duplication.

- The loop continues until no more records are found in the legacy database.
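The role of `lastProcessedId` in the steps above can be shown with a small plain-Java simulation of a crash and a resume. It is a sketch, not Temporal SDK code: `ResumeSketch`, `Checkpoint`, and `run` are hypothetical names, and the `Checkpoint` object merely stands in for the workflow state that Temporal persists for us.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java illustration of resuming from lastProcessedId; NOT Temporal SDK code.
// ResumeSketch, Checkpoint, and run are hypothetical names.
class ResumeSketch {

    /** Stand-in for the orchestrator's durable state; here it is just an object that outlives a failed run. */
    static final class Checkpoint {
        int lastProcessedId = 0; // ids are assumed positive
        final List<Integer> started = new ArrayList<>();
    }

    /** Pages through sorted ids; throws on reaching crashAtId to simulate a crash (-1 means no crash). */
    static void run(List<Integer> sortedIds, int pageSize, Checkpoint cp, int crashAtId) {
        while (true) {
            // Fetch the next page strictly after the checkpoint.
            List<Integer> batch = new ArrayList<>();
            for (int id : sortedIds) {
                if (batch.size() >= pageSize) {
                    break;
                }
                if (id > cp.lastProcessedId) {
                    batch.add(id);
                }
            }
            if (batch.isEmpty()) {
                return;
            }
            for (int id : batch) {
                if (id == crashAtId) {
                    throw new IllegalStateException("simulated crash before record " + id);
                }
                cp.started.add(id);      // "start a child workflow" for this record
                cp.lastProcessedId = id; // advance the checkpoint once the child is started
            }
        }
    }

    public static void main(String[] args) {
        List<Integer> ids = List.of(101, 102, 103, 104, 105);
        Checkpoint cp = new Checkpoint();
        try {
            run(ids, 2, cp, 104); // first run dies partway through the second batch
        } catch (IllegalStateException e) {
            System.out.println("crashed with lastProcessedId=" + cp.lastProcessedId); // 103
        }
        run(ids, 2, cp, -1); // second run resumes after 103
        System.out.println("started=" + cp.started); // [101, 102, 103, 104, 105]
    }
}
```

Each record appears exactly once in `started`, even though the first run was interrupted in the middle of a batch.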

Explanation of sequence diagram 2

- This diagram shows a detailed view of what happens within a single Child Workflow (e.g., Payment Processing Workflow).

- It orchestrates a series of Activities, such as processing a payment or sending an email communication.

- Each Activity interacts with an external service; here, these are our PaymentProcessingService and EmailService.

- Temporal’s built-in retry mechanisms handle transient failures for any of these Activities, keeping the overall policy payment and email communication processes robust.

- Upon completion, the Child Workflow’s status is recorded by the Temporal Server, where the Orchestrator can observe it.

Key benefits of Temporal in SageSure’s modernization journey

- Reliability & Durability: Temporal guarantees workflow execution to completion, even if our systems crash. This is crucial for financial integrity, regulatory compliance, and maintaining policyholder trust.

- Simplified Complexity for Business Logic: Complex retry logic for external integrations, long-running processes, and compensation patterns are built into Temporal’s programming model. This significantly reduces the amount of fragile, boilerplate code we need to write and maintain.

- Improved Scalability: Our core business logic is decoupled from database contention. Temporal scales horizontally, allowing us to handle a massive number of concurrent policy applications and complex billing cycles while staying responsive and operational during peak demand.

- Accelerated Product Innovation: We can write complex insurance processes as clear, imperative code. The clean separation of Workflow and Activity logic makes it easier for our product managers and developers to quickly design, test, and deploy new insurance products, adjust commission rules, or modify existing claims processes to respond to market changes.

- Future-Proofing Tech: We can move away from a monolithic, tightly coupled design towards a more distributed, microservices-friendly architecture, paving the way for advanced geospatial analytics, AI-driven risk assessment, real-time data integration, and other future modernization efforts crucial for a leader in the Insurance Tech space.
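For readers unfamiliar with the compensation patterns mentioned under Simplified Complexity, here is a minimal plain-Java sketch of the idea: each completed step registers an undo action, and a later failure runs those actions in reverse order. It is not Temporal SDK code (the Java SDK provides its own `Saga` helper for this), and the class name and step descriptions are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Plain-Java sketch of a compensation (saga) pattern; NOT Temporal SDK code.
// CompensationSketch and the step names are hypothetical.
class CompensationSketch {

    /** Runs the endorsement steps; if a later step fails, undoes completed steps in reverse order. */
    static List<String> applyEndorsement(boolean billingFails) {
        List<String> log = new ArrayList<>();
        Deque<Runnable> compensations = new ArrayDeque<>();
        try {
            log.add("reserve premium adjustment");
            compensations.push(() -> log.add("release premium adjustment"));

            log.add("update policy record");
            compensations.push(() -> log.add("restore policy record"));

            if (billingFails) {
                throw new IllegalStateException("billing system unavailable");
            }
            log.add("post billing change");
        } catch (IllegalStateException e) {
            // push() adds to the front, so pop() yields the most recent compensation first.
            while (!compensations.isEmpty()) {
                compensations.pop().run();
            }
            log.add("endorsement rolled back");
        }
        return log;
    }

    public static void main(String[] args) {
        System.out.println(applyEndorsement(false));
        System.out.println(applyEndorsement(true));
    }
}
```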

Conclusion

Migrating our core workflows from legacy RDBMS-based applications to Temporal was not just about fixing immediate problems; it was about transforming the fundamental way SageSure operates. It liberated our critical business logic from the confines of outdated architectures, allowing us to innovate faster, improve customer experience, reduce operational risk, and build systems that are ready for the demands and evolution of the modern Insurance Tech landscape.